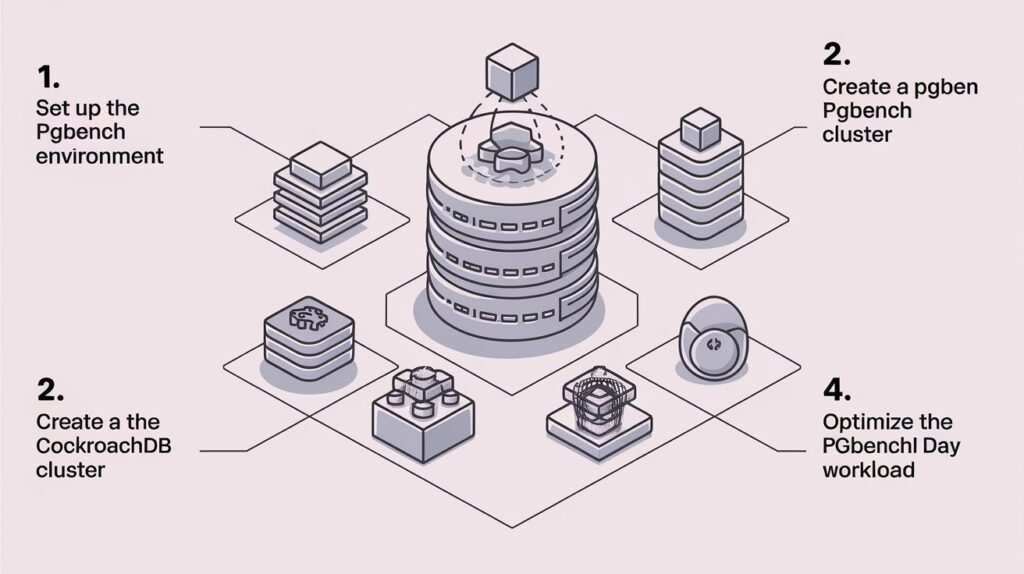

When it comes to benchmarking distributed databases like CockroachDB, pgbench is a valuable tool for measuring and optimizing performance. In this third part of our series on optimizing pgbench for CockroachDB, we dive deeper into advanced strategies for getting more reliable benchmark results and better overall performance. Whether you are scaling your cluster or fine-tuning queries, understanding the critical levers is vital to making CockroachDB work efficiently for your needs.

What is pgbench?

Before diving into optimizations, let’s review pgbench. It is a benchmarking tool that ships with PostgreSQL, and it also works against CockroachDB because CockroachDB speaks the PostgreSQL wire protocol. pgbench simulates transactional workloads by running a short SQL script (by default, a transaction loosely based on TPC-B) over and over from many concurrent clients, which gives you a performance baseline. However, running pgbench without proper tuning will not give you the complete picture of CockroachDB’s potential.

Key Areas of Optimization

- Cluster Size Matters

Cluster size is one of the most critical factors when benchmarking CockroachDB with pgbench. CockroachDB is designed as a scalable, distributed database, so adding nodes can increase throughput. However, every additional node adds cost, and the throughput gained per added node is not guaranteed to stay constant. Start by running pgbench against a small cluster, then scale up gradually. This lets you pinpoint the cluster size that delivers the throughput you need without unnecessary resource expenditure.
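To make the scale-up procedure concrete, here is a minimal Python sketch. It assumes you have recorded one pgbench throughput figure (TPS) for each cluster size you tested; the `results` mapping, the target throughput, and both function names are hypothetical and are not part of pgbench or CockroachDB.

```python
from typing import Dict, Mapping, Optional


def throughput_per_node(results: Mapping[int, float]) -> Dict[int, float]:
    """Measured TPS divided by node count, to expose diminishing returns."""
    return {nodes: tps / nodes for nodes, tps in results.items()}


def smallest_cluster_meeting(results: Mapping[int, float], target_tps: float) -> Optional[int]:
    """Return the fewest nodes whose measured TPS reaches target_tps, or None."""
    for nodes in sorted(results):
        if results[nodes] >= target_tps:
            return nodes
    return None  # no tested cluster size was fast enough: keep scaling up
```

Feeding in one entry per tested cluster size answers "how few nodes can I get away with?", while `throughput_per_node` shows how much each added node is still buying you.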

- Tuning Client Connections

pgbench can simulate many concurrent client connections (its -c option, with -j setting the number of worker threads), which is critical for testing concurrency. For a distributed system like CockroachDB, it is important to test how it handles large numbers of concurrent connections. Too few clients can leave the cluster underutilized, while too many can overload it and degrade performance. The sweet spot is usually found by increasing the client count step by step until transaction throughput shows diminishing returns.
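The "increase until diminishing returns" rule can be written down as a small search over a client sweep. This is a sketch under stated assumptions: each `(clients, tps)` pair is assumed to come from a separate pgbench run at that `-c` value, and the 5% `min_gain` threshold is an arbitrary illustration you should adjust to your own noise level.

```python
from typing import Sequence, Tuple


def find_client_sweet_spot(sweep: Sequence[Tuple[int, float]], min_gain: float = 0.05) -> int:
    """Client count after which adding clients stops paying off.

    sweep: (clients, tps) pairs, one per pgbench run (e.g. -c 8, 16, 32, ...).
    min_gain: relative TPS improvement below which we call it diminishing
              returns (0.05 = 5%; an illustrative value, not a standard).
    """
    ordered = sorted(sweep)  # sort by client count
    if not ordered:
        raise ValueError("sweep must contain at least one run")
    best_clients, best_tps = ordered[0]
    for clients, tps in ordered[1:]:
        if tps < best_tps * (1 + min_gain):
            break  # gain too small, or throughput fell: stop the sweep here
        best_clients, best_tps = clients, tps
    return best_clients
```

Because the search stops at the first step whose gain falls below the threshold, a single noisy run can end it early; repeating each run and averaging before calling the function is a reasonable safeguard.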

- Network Latency and Node Distribution

Distributed systems like CockroachDB rely heavily on network performance. When using pgbench to measure CockroachDB performance, make sure your nodes are placed sensibly across your network, because latency can significantly affect throughput, especially for transactional workloads. Running pgbench against geographically dispersed nodes gives you a better understanding of real-world performance, while running the pgbench client close to the nodes keeps latency to a minimum when you need accurate, repeatable baseline numbers.
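A back-of-the-envelope calculation shows why client-to-node latency matters so much. A pgbench client runs its transactions one after another, and the built-in TPC-B-like script sends seven statements per transaction (BEGIN, three UPDATEs, a SELECT, an INSERT, and END), each of which waits for a reply. Latency alone therefore caps throughput at roughly clients / (7 × RTT). The sketch below assumes the default script and ignores server-side execution time, so it gives an upper bound, not a prediction; the function name is illustrative.

```python
DEFAULT_SCRIPT_ROUND_TRIPS = 7  # BEGIN, 3x UPDATE, SELECT, INSERT, END


def latency_bound_tps(clients: int, rtt_ms: float,
                      round_trips: int = DEFAULT_SCRIPT_ROUND_TRIPS) -> float:
    """Upper bound on TPS imposed by client-to-node round-trip time alone.

    Each client waits for one round trip per statement, so a single client
    can finish at most 1000 / (round_trips * rtt_ms) transactions per second.
    Server-side execution time is ignored, so real throughput will be lower.
    """
    if clients < 1 or rtt_ms <= 0 or round_trips < 1:
        raise ValueError("clients, rtt_ms and round_trips must be positive")
    per_client_tps = 1000.0 / (round_trips * rtt_ms)
    return clients * per_client_tps
```

With 10 clients and a 20 ms round trip, for example, `latency_bound_tps(10, 20.0)` is about 71 TPS however fast the cluster is, which is why baseline runs benefit from placing the client close to the nodes.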

- Query Optimization

Although pgbench runs simple queries, optimizing them can noticeably improve benchmark results. Use EXPLAIN ANALYZE to inspect query execution plans and find where the time is spent. Restructuring queries or adding appropriate indexes can make CockroachDB respond faster, especially on large datasets.

To maximize efficiency, create indexes on frequently filtered columns. This reduces the time CockroachDB spends scanning tables and fetching results during benchmarking, although every extra index also adds work to each write.
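When inspecting plans, it helps to run EXPLAIN ANALYZE on the exact statements pgbench sends. The helper below is a hypothetical convenience, not a pgbench feature: it substitutes concrete values for pgbench-style `:variables` and prefixes EXPLAIN ANALYZE, producing a string you could paste into any SQL client such as `cockroach sql`. Because EXPLAIN ANALYZE actually executes the statement, the example uses the read-only SELECT from pgbench's built-in script.

```python
import re
from typing import Mapping

# Read-only statement from pgbench's built-in TPC-B-like script.
PGBENCH_SELECT = "SELECT abalance FROM pgbench_accounts WHERE aid = :aid;"

# Matches :name but not the second colon of a ::TYPE cast.
_VARIABLE = re.compile(r"(?<!:):([A-Za-z_]\w*)")


def explain_analyze(statement: str, variables: Mapping[str, int]) -> str:
    """Substitute pgbench-style :variables and prefix EXPLAIN ANALYZE.

    EXPLAIN ANALYZE runs the statement for real, so prefer read-only
    statements (or a scratch database) when inspecting plans.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no value supplied for :{name}")
        return str(variables[name])

    body = _VARIABLE.sub(substitute, statement.strip().rstrip(";"))
    return f"EXPLAIN ANALYZE {body};"
```

For that SELECT, you would expect the plan to show a lookup on the primary key of pgbench_accounts rather than a full table scan; a scan there would be worth investigating.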

- Experimenting with Replication Factor

CockroachDB automatically replicates data across nodes to ensure fault tolerance; by default, each range is stored as three replicas. While this is crucial for data durability, every write must be acknowledged by a majority of replicas, which can add latency in a distributed setup. Adjusting the replication factor for your benchmarking workload can help balance fault tolerance and performance. A lower replication factor might yield faster results in pgbench tests, but it also reduces the number of node failures the cluster can survive without losing data or availability.
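The trade-off can be quantified. CockroachDB replicates data with Raft, so a range stays available only while a majority of its replicas are up, which means N replicas tolerate floor((N - 1) / 2) failures. The sketch below computes that and builds the zone-configuration statement that changes the replication factor; the function names are illustrative, and `RANGE default` is just one possible target (a database or table can be targeted instead).

```python
def failures_tolerated(num_replicas: int) -> int:
    """Replica failures a range survives while a Raft majority remains."""
    if num_replicas < 1:
        raise ValueError("num_replicas must be at least 1")
    return (num_replicas - 1) // 2


def zone_config_sql(num_replicas: int, target: str = "RANGE default") -> str:
    """CockroachDB statement that sets the replication factor for `target`."""
    if num_replicas < 1:
        raise ValueError("num_replicas must be at least 1")
    return f"ALTER {target} CONFIGURE ZONE USING num_replicas = {num_replicas};"
```

Note that an even replica count buys no extra fault tolerance: `failures_tolerated(4)` is 1, the same as for 3 replicas, while every write still needs acknowledgments from a majority of 4.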


- Scale Factor and Data Size

When using pgbench, the scale factor (the -s option at initialization) determines the size of the generated dataset: each unit adds 100,000 rows to the pgbench_accounts table. Choose a scale factor that matches the size of your anticipated production dataset. With a small dataset, CockroachDB may perform well even with minimal optimization, but as your data grows, tuning becomes more important. A larger scale factor puts more stress on the database, revealing potential bottlenecks so you can tune the system appropriately.
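pgbench's table sizes follow directly from the scale factor: each unit of `-s` produces 1 row in pgbench_branches, 10 in pgbench_tellers, and 100,000 in pgbench_accounts. The sketch below turns that into a way to choose `-s` from a target accounts-table size and builds the matching `pgbench -i` command line. The function names and the connection URL you pass in are placeholders, and the sketch does not check whether every initialization step runs unchanged on your CockroachDB version.

```python
from typing import Dict, List

ACCOUNTS_PER_SCALE = 100_000  # pgbench_accounts rows per unit of -s
TELLERS_PER_SCALE = 10        # pgbench_tellers rows per unit of -s
BRANCHES_PER_SCALE = 1        # pgbench_branches rows per unit of -s


def rows_for_scale(scale: int) -> Dict[str, int]:
    """Row counts generated by `pgbench -i -s scale` (pgbench_history starts empty)."""
    if scale < 1:
        raise ValueError("scale must be at least 1")
    return {
        "pgbench_branches": BRANCHES_PER_SCALE * scale,
        "pgbench_tellers": TELLERS_PER_SCALE * scale,
        "pgbench_accounts": ACCOUNTS_PER_SCALE * scale,
    }


def scale_for_accounts(target_rows: int) -> int:
    """Smallest scale factor whose pgbench_accounts table has at least target_rows rows."""
    return max(1, -(-target_rows // ACCOUNTS_PER_SCALE))  # integer ceiling division


def init_command(scale: int, db_url: str) -> List[str]:
    """argv for initializing the pgbench tables at the given scale."""
    return ["pgbench", "-i", "-s", str(scale), db_url]
```

For example, `scale_for_accounts(2_500_000)` returns 25, so `pgbench -i -s 25` would generate an accounts table of that size.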

- Transaction Rate Tuning

pgbench’s --rate (-R) option sets a target number of transactions per second; without it, each client runs transactions as fast as it can. Raising the target rate helps stress-test the system, but raising it too quickly, before the rest of the setup is tuned, can create artificial bottlenecks. Increase the rate gradually while monitoring system performance metrics to see how CockroachDB responds under pressure.
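A gradual ramp is easy to script. The sketch below generates a list of target rates and the pgbench arguments for one rate-limited run using pgbench's `-n`, `-c`, `-j`, `-T`, `-R`, and `-P` options. The default client, thread, and duration values are placeholders, skipping VACUUM with `-n` reflects our assumption that CockroachDB does not implement PostgreSQL's VACUUM, and passing the connection URL as pgbench's database argument assumes a libpq-style connection string.

```python
from typing import List


def rate_schedule(start_tps: int, stop_tps: int, step_tps: int) -> List[int]:
    """Target rates to try, from start_tps up to stop_tps inclusive."""
    if start_tps <= 0 or step_tps <= 0 or stop_tps < start_tps:
        raise ValueError("need 0 < start_tps <= stop_tps and step_tps > 0")
    return list(range(start_tps, stop_tps + 1, step_tps))


def rate_limited_run(db_url: str, rate_tps: int, clients: int = 16,
                     threads: int = 4, duration_s: int = 300) -> List[str]:
    """argv for one rate-limited pgbench run.

    -n skips pgbench's VACUUM step (assumed unsupported on CockroachDB),
    -R sets the target rate, -P 10 prints progress every 10 seconds.
    The default clients/threads/duration are placeholders, not recommendations.
    """
    return [
        "pgbench", "-n",
        "-c", str(clients), "-j", str(threads),
        "-T", str(duration_s),
        "-R", str(rate_tps),
        "-P", "10",
        db_url,
    ]
```

Each list can be handed to `subprocess.run`. With `-R`, pgbench also reports schedule lag; if lag keeps growing at a given step, the cluster can no longer sustain that rate, which marks the upper end of your ramp.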

Advanced Monitoring and Profiling

Monitoring Resource Utilization

Use CockroachDB’s built-in monitoring tools, such as the DB Console, to track key performance metrics like CPU usage, memory consumption, disk I/O, and network latency. Understanding how these resources are used during a pgbench run can reveal areas for optimization. If CPU or disk I/O stays consistently high, CockroachDB is likely bottlenecked on resource availability rather than on query execution.

Profiling Queries with pgbench

pgbench’s per-statement latency report (the -r or --report-per-command option) can help identify slow statements and performance hotspots. By analyzing these statements, you can tweak indexes, adjust SQL syntax, or reduce query complexity to improve CockroachDB’s throughput.
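With `-r`, pgbench prints a "statement latencies in milliseconds" section listing the average latency of each statement in the script. The parser below is a sketch that ranks those statements slowest first. It assumes the layout of recent pgbench versions, where each line starts with the latency and may be followed by integer failure and retry columns before the statement text; check it against your own pgbench output before relying on it.

```python
from typing import List, Tuple


def parse_statement_latencies(report: str) -> List[Tuple[float, str]]:
    """Return (latency_ms, statement) pairs from `pgbench -r` output, slowest first."""
    rows: List[Tuple[float, str]] = []
    in_section = False
    for line in report.splitlines():
        stripped = line.strip()
        if stripped.startswith("statement latencies in milliseconds"):
            in_section = True  # header wording differs slightly across versions
            continue
        if not in_section or not stripped:
            continue
        tokens = stripped.split()
        try:
            latency = float(tokens[0])
        except ValueError:
            in_section = False  # first non-numeric line ends the section
            continue
        rest = tokens[1:]
        while rest and rest[0].isdigit():
            rest = rest[1:]  # skip failure/retry count columns, if present
        # Re-joining tokens normalizes whitespace inside the statement text.
        rows.append((latency, " ".join(rest)))
    return sorted(rows, key=lambda row: row[0], reverse=True)
```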

Importance of Caching

CockroachDB benefits significantly from caching, especially when running frequent, repetitive queries like those in a pgbench workload. Make sure CockroachDB’s internal caching can do its job, for example by sizing the storage engine cache with the --cache flag of cockroach start. You can also consider external caching layers, but keep in mind that a cache in front of the database changes what the benchmark measures.
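Because caches start cold, the first intervals of a run tend to understate steady-state throughput. One way to account for that is to print progress with pgbench's `-P` option (pgbench writes these lines to standard error) and average only the samples reported after a warm-up window. The sketch assumes progress lines that begin like `progress: 20.0 s, 300.0 tps`; the function name and the warm-up length are choices you make, not values pgbench provides.

```python
import re
from statistics import mean

# Assumed prefix of pgbench -P lines, e.g. "progress: 20.0 s, 300.0 tps, lat ..."
PROGRESS_RE = re.compile(r"^progress: ([\d.]+) s, ([\d.]+) tps")


def steady_state_tps(progress_output: str, warmup_s: float) -> float:
    """Mean TPS over progress samples reported after the warm-up window."""
    samples = []
    for line in progress_output.splitlines():
        match = PROGRESS_RE.match(line.strip())
        if match and float(match.group(1)) > warmup_s:
            samples.append(float(match.group(2)))
    if not samples:
        raise ValueError("no progress samples after the warm-up window")
    return mean(samples)
```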

Iterative Optimization is Key

Optimizing pgbench for CockroachDB is not a one-time process; it requires iteration. Start with a baseline by running pgbench with default settings, then change one thing at a time, such as the number of client connections, the transaction rate, or query and index design. Each iteration should give you new insight into how CockroachDB performs under different loads and configurations.
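The iteration loop itself can be expressed as a one-factor-at-a-time search: start from the baseline, change a single setting, and keep the change only if throughput improves by more than a noise margin. In this sketch `run_benchmark` is a hypothetical callable you would implement yourself (for example, by launching pgbench and parsing its TPS), and the 2% margin is an assumption.

```python
from typing import Callable, Dict, List, Tuple

Config = Dict[str, int]


def tune_one_at_a_time(
    baseline: Config,
    candidates: List[Tuple[str, int]],
    run_benchmark: Callable[[Config], float],
    min_improvement: float = 0.02,
) -> Tuple[Config, float]:
    """Try each (setting, value) change in turn; keep it only if TPS clearly improves."""
    best_cfg = dict(baseline)  # copy so the caller's baseline is not mutated
    best_tps = run_benchmark(best_cfg)
    for key, value in candidates:
        trial = dict(best_cfg)
        trial[key] = value  # change exactly one setting per iteration
        tps = run_benchmark(trial)
        if tps > best_tps * (1 + min_improvement):
            best_cfg, best_tps = trial, tps
    return best_cfg, best_tps
```

One-factor-at-a-time search is simple and easy to reason about, but it can miss interactions between settings, such as a higher client count that only pays off at a larger scale factor.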

Conclusion

In this third part of our series on optimizing pgbench for CockroachDB, we’ve covered critical areas such as cluster size, query optimization, and transaction rate tuning. Each factor plays a role in improving CockroachDB performance when running pgbench benchmarks. Keep in mind that optimization is a continuous process: every workload is unique, and what works for one setup might not apply to another. By carefully analyzing each element of your system and testing configurations, you can make sure your CockroachDB setup runs at its best.

This guide offers a structured approach to fine-tuning your CockroachDB benchmarking process, ensuring both efficiency and performance as you continue optimizing pgbench for CockroachDB.